Heat maps are among the most widely used, and most debated, tools that risk managers worldwide use to communicate the risks in their registers or project portfolios. Despite their popularity, we advise leaders seeking transparency in discussing risk and value to avoid relying on them.

What are heat maps?

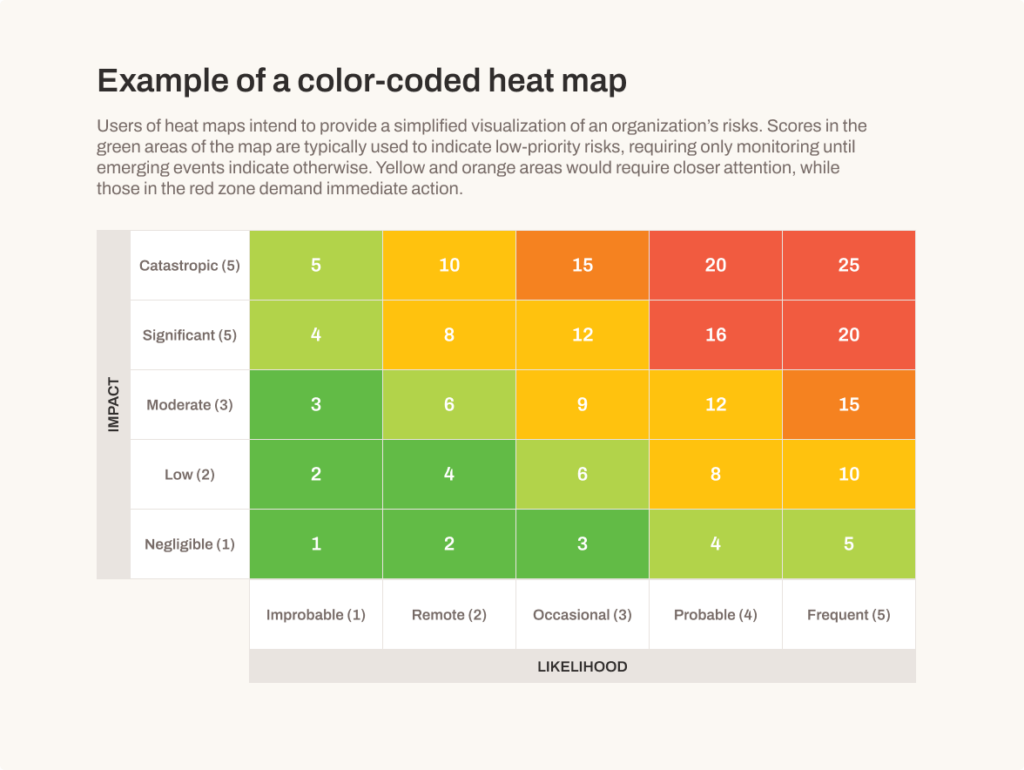

Risk managers often use heat maps (or risk matrices) to represent risk assessments of events in a company’s risk register. Teams responsible for risk management construct heat maps as a matrix, with likelihood on one axis and impact on the other. Both the likelihood and the impact of risk register events are usually scored on a scale of 1–5.

Risk managers then place each event in the matrix according to its ordered pair of likelihood and impact. They prioritize the events deserving the most attention by their degree of “heat,” calculated as the product of likelihood and impact.

For example, an event with a heat of 25 (Catastrophic impact = 5, Frequent likelihood = 5) deserves more attention than one with a heat of 9 (Moderate impact = 3, Occasional likelihood = 3), and much more attention than one with a heat of 1 (Negligible impact = 1, Improbable likelihood = 1).
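The scoring described above is simple enough to sketch in a few lines of Python. The event names below are hypothetical placeholders; only the scores come from the example in the text.

```python
# Hypothetical register entries: (name, likelihood 1-5, impact 1-5).
events = [
    ("Event A", 5, 5),  # Frequent likelihood, Catastrophic impact
    ("Event B", 3, 3),  # Occasional likelihood, Moderate impact
    ("Event C", 1, 1),  # Improbable likelihood, Negligible impact
]


def heat(likelihood: int, impact: int) -> int:
    """Heat as conventionally computed: likelihood score times impact score."""
    return likelihood * impact


# Score every event, then rank from hottest to coolest.
heat_scores = {name: heat(l, i) for name, l, i in events}
ranked = sorted(heat_scores, key=heat_scores.get, reverse=True)

for name in ranked:
    print(f"{name}: heat = {heat_scores[name]}")
```

The three events score 25, 9, and 1, matching the example. Note how little information goes into the ranking: two small integers per event and nothing else.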

Why heat maps are ineffective

This may seem like a straightforward practice to represent and communicate event risks to support risk management decisions. However, heat maps carry several limitations that make them ineffective for risk management.

Oversimplification

Heat maps reduce complex risk assessments to a simple, two-dimensional representation, losing nuance and context.

Subjective scoring

Scoring risks on likelihood and impact is often subjective and follows no particular process or criteria, leading to inconsistent and biased assessments. The values in the heat map are often assigned in a BOGAT (Bunch Of Guys At a Table) meeting. Unfortunately, those meetings invite motivated reasoning, in which budget requirements implicitly guide the assessment of risk.

Lack of transparency

Heat maps don’t provide clear explanations for the scores or the underlying assumptions. This makes it difficult to understand the reasoning behind the risk assessment. For example, why should a given impact be assigned a 5 rather than a 4? Usually, we aren’t given the model (if one exists) that assigns these values.

Inability to capture dependencies

Heat maps typically don’t account for relationships between risks. This can lead to a lack of understanding of how risks interact and compound.

Static representation

Heat maps are often static, failing to reflect changes in the risk landscape over time. In cybersecurity, this problem and the previous one are amplified by the primary source of risk: threat actors, who learn and evolve in response to the learning and defensive evolution of the companies protecting their corporate crown jewels.

Vague timescales, units, and meanings

Heat maps don’t specify the window of time in which risk management teams expect risks to materialize. Terms like “Frequent” or “Occasional” describe a rate and should carry units like [events/unit time], while terms like “Improbable,” “Remote,” and “Probable” imply a probability of occurrence within a known window of time.

Unfortunately, risk teams often mix these terms in the category names, making the actual probability of an event difficult to understand. Furthermore, the descriptive terms for likelihood are not used consistently. What one person deems “Probable,” another might consider “Remote.”
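To see why the time window matters, here is a minimal sketch that converts a rate into a probability. It assumes events arrive as a Poisson process, which is our simplifying assumption for illustration and not something a heat map states; the rate of 0.2 events per year is a made-up value.

```python
import math


def prob_at_least_one(rate_per_year: float, window_years: float) -> float:
    """P(at least one event in the window), assuming Poisson arrivals."""
    return 1.0 - math.exp(-rate_per_year * window_years)


rate = 0.2  # hypothetical: one event every five years on average

for window in (1, 5, 10):
    p = prob_at_least_one(rate, window)
    print(f"{window:>2}-year window: P(at least one event) = {p:.2f}")
```

Under this assumption the same rate gives roughly an 18% chance over one year, 63% over five years, and 86% over ten. Whether that event is “Remote” or “Probable” depends entirely on a window the heat map never names.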

Lack of impact range

A single number represents the impact score, concealing the uncertainty of specific event outcomes that cannot be known in advance. Therefore, the most accurate way to represent an event impact is with a range.

Moreover, the range of outcomes can cover multiple levels on the impact index, often encompassing a broad spectrum. It’s often unclear which statistical measure (such as the median, average, or 95th percentile) the impact score represents.

Relying on a central tendency like the average exposes the organization to the “Flaw of Averages,” while using an extreme value alone risks overstating the organization’s exposure. Using single point values distorts the guidance risk management teams need to ensure effective treatment of risks.
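A short sketch shows how far apart the candidate single-point values can sit. It assumes the impact follows a lognormal distribution, a common but by no means universal choice for losses; the median of $1M and the spread parameter of 1.2 are hypothetical.

```python
import math
from statistics import NormalDist

median_loss = 1_000_000  # hypothetical median impact, in dollars
sigma = 1.2              # hypothetical standard deviation of log-losses

# For a lognormal, mu is the log of the median.
mu = math.log(median_loss)

# Closed-form summaries of the same distribution.
mean_loss = math.exp(mu + sigma**2 / 2)
z95 = NormalDist().inv_cdf(0.95)
p95_loss = math.exp(mu + z95 * sigma)

print(f"median:          ${median_loss:,.0f}")
print(f"mean:            ${mean_loss:,.0f}")
print(f"95th percentile: ${p95_loss:,.0f}")
```

With these inputs the mean is roughly $2.1M and the 95th percentile roughly $7.2M, against a median of $1M. All three describe the same event, yet each could land in a different cell of the impact scale.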

Mathematical inconsistency

Heat maps fail to communicate risk in a quantitatively coherent and logically consistent way. First, in the heat map, likelihood serves as a simplified proxy for the probability of occurrence. Although probability is defined as a real number within a bounded range of 0–1, the heat map represents likelihood using bounded integer values from 1 to 5.

Second, the heat map represents impact using ordinal values. Ordinal values are categorical in nature: they tell the order of importance, or “place in line,” of a category, but not the distance between categories. Consequently, an impact of 3 doesn’t communicate how much worse it is than a 1 or 2, nor how much better it is than a 4 or 5. Nor do we know whether some impacts deserve a score of 6 or 12 because they are worse, or much worse, than a 5. Impacts do not materialize in real life as ordinal values, nor do they occur on a strictly bounded range of ordinal values.

Third, while the values in the heat map look like numbers we can combine with simple arithmetic to calculate the heat of a risk, they aren’t the kind of numbers that support that operation. Ordinal values encode only order, so multiplying an integer likelihood by an ordinal impact produces a result with no defined meaning. Doing so is like multiplying apples by chihuahuas, a nonsensical operation.
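One way to see the problem: because ordinal labels carry only order, any relabeling that preserves the order is equally valid. The sketch below uses two hypothetical events and a hypothetical relabeling of the impact scale, and shows that the heat ranking depends on which labels we happened to pick.

```python
# Hypothetical events: name -> (likelihood score, impact category 1-5).
events = {"A": (2, 5), "B": (4, 3)}

# Two labelings of the same five ordered impact categories.
# Both preserve the order 1 < 2 < 3 < 4 < 5; only the top label differs.
original_labels = {1: 1, 2: 2, 3: 3, 4: 4, 5: 5}
relabeled = {1: 1, 2: 2, 3: 3, 4: 4, 5: 10}


def rank(events, impact_labels):
    """Rank events by heat, using the given numeric labels for impact."""
    scored = {
        name: likelihood * impact_labels[impact]
        for name, (likelihood, impact) in events.items()
    }
    return sorted(scored, key=scored.get, reverse=True)


print("original labels:", rank(events, original_labels))
print("relabeled:      ", rank(events, relabeled))
```

With the original labels, B (heat 12) outranks A (heat 10). With the relabeled scale, A (heat 20) outranks B (heat 12), even though no event changed category. A ranking that flips under an arbitrary choice of labels is not measuring anything about the risks themselves.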

Difficulty in prioritization

Heat maps can make it challenging to prioritize risks, as the scoring system may not accurately reflect the organization’s specific risk tolerance and priorities. The issue intensifies when we assign an “average” score of 3 to an impact, despite its potential severity extending to a 5.

This misalignment can lead to both overestimating and underestimating the true degree of risk. As a result, effective prioritization becomes nearly impossible, leading to a distorted view of which risks require attention.

Lack of actionable insights

Relying on heat map values to approximate risk-adjusted impacts prevents financially informed trade-off decisions. The approach produces results that neither follow accepted mathematical rules nor carry financial units, making meaningful comparisons among risk events impossible. And since heat maps do not account for the cost or investment that any risk management decision requires, they don’t provide clear recommendations for risk transfer, mitigation, or remediation.

In response to these criticisms, some have suggested that the heat map is “just a model,” and that “all models are wrong, but some are useful.” Thus, they maintain, the heat map is a coarse but useful tool.

For a model to be useful, however, its guidance must actually improve decisions. Research by Tony Cox [1, 2], Douglas Hubbard [3, 4], and others [5] has shown that heat maps are less than useful; that is, they provide guidance that steers decision makers toward decisions that violate well-known principles of good decision-making practice, destroying company value as a result. Combining those insights with the mathematical inconsistencies described above suggests that heat maps are a model that does not even rise to the level of being wrong.

A better way

A high-quality risk assessment possesses several defining features. Our approach includes transparent criteria for measuring uncertain events in terms of their arrival rate and impact, a clear timeline for when concerning events might occur, mathematically consistent terms in risk evaluation, explicit communication of impact ranges, and a direct connection to the risk mitigation or transfer decisions that should follow.
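As a generic illustration of what those features can look like together, here is a small Monte Carlo sketch. It is not a description of any particular product or method: the Poisson arrivals, lognormal impacts, parameter values, and control cost are all hypothetical assumptions chosen for the example.

```python
import math
import random


def simulate_annual_losses(rate_per_year, median_impact, sigma,
                           trials=20_000, seed=7):
    """Simulate total loss per year: Poisson arrivals, lognormal impacts."""
    rng = random.Random(seed)
    mu = math.log(median_impact)
    totals = []
    for _ in range(trials):
        total = 0.0
        # Count arrivals in a one-year window via exponential waiting times.
        elapsed = rng.expovariate(rate_per_year)
        while elapsed < 1.0:
            total += rng.lognormvariate(mu, sigma)
            elapsed += rng.expovariate(rate_per_year)
        totals.append(total)
    return sorted(totals)


def summarize(sorted_totals):
    """Report the mean plus two percentiles of the simulated annual loss."""
    n = len(sorted_totals)
    return {
        "mean": sum(sorted_totals) / n,
        "p50": sorted_totals[n // 2],
        "p95": sorted_totals[int(0.95 * n)],
    }


# Hypothetical scenario: a control lowers the arrival rate from 0.5 to 0.2
# events per year and leaves the impact distribution unchanged.
baseline = summarize(simulate_annual_losses(0.5, 1_000_000, 1.2))
mitigated = summarize(simulate_annual_losses(0.2, 1_000_000, 1.2))

control_cost = 250_000  # hypothetical annual cost of the control
expected_reduction = baseline["mean"] - mitigated["mean"]

print(f"baseline:  {baseline}")
print(f"mitigated: {mitigated}")
print(f"expected annual loss avoided: ${expected_reduction:,.0f}")
print(f"control pays for itself on average: {expected_reduction > control_cost}")
```

Every quantity here has units: events per year, dollars, and a one-year window. The output is a range of annual losses in dollars that can be set directly against the cost of a control. In this scenario more than half of the simulated years see no event at all, so the median annual loss is zero while the upper percentiles run into the millions, which is exactly the kind of spread a single cell in a matrix conceals.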

Heat maps don’t provide these features. The Resilience solution does. If you are interested in learning about more instructive ways to manage and communicate the risk of your current cybersecurity risk surface, reach out to us and we’ll show you a better way.

Sources:

1. Cox Jr., L.A., Babayev, D., and Huber, W. 2005. Some limitations of qualitative risk rating systems. Risk Analysis 25 (3): 651–662. http://dx.doi.org/10.1111/j.1539-6924.2005.00615.x.

2. Cox Jr., L.A. 2008. What’s Wrong with Risk Matrices? Risk Analysis 28 (2): 497–512. http://dx.doi.org/10.1111/j.1539-6924.2008.01030.x.

3. Hubbard, D.W. 2009. The Failure of Risk Management: Why It’s Broken and How to Fix It. Hoboken, New Jersey: John Wiley & Sons, Inc.

4. Hubbard, D., and Evans, D. 2010. Problems with scoring methods and ordinal scales in risk assessment. IBM Journal of Research and Development 54, 2. doi:10.1147/JRD.2010.2042914.

5. Thomas, P., Bratvold, R., and Bickel, J. 2013. The Risk of Using Risk Matrices. SPE Economics & Management 6. doi:10.2118/166269-MS.